Measuring developer productivity is contentious. Get it wrong, and you create surveillance culture, gaming, and attrition. Get it right, and you enable growth, remove obstacles, and demonstrate engineering value to the organization. This guide shows you how to approach productivity measurement ethically and effectively.

The key insight: productivity measurement should help developers succeed, not catch them failing. When engineers feel that metrics are used for them rather than against them, resistance transforms into engagement.

If you are evaluating developer productivity tools, start with the metrics and behaviors you want to improve. The right developer metrics should make work easier, not just more measurable. Use our free developer productivity assessment to benchmark where your team stands today.

🔥 Our Take

The phrase "engineering productivity" is a trap. It implies engineers are like factory workers with countable outputs. They're not. Software development is creative knowledge work. Stop trying to measure it like an assembly line.

The "productivity" framing leads to bad metrics: lines of code, commit counts, story points completed. Better framing: "engineering effectiveness" or "delivery capability." You're not measuring how hard people work—you're measuring how well your system enables great work.

"The most important engineering work is unmeasurable: architecture decisions that prevented problems, mentorship that made someone better, documentation that saved hours of confusion."

The Productivity Paradox

Why Measuring Productivity Is Hard

Software development doesn't work like manufacturing. You can't simply count output because:

- Quality matters more than quantity: One elegant solution beats ten hacky workarounds

- Problems vary dramatically: Some tasks take hours, others take weeks

- Value isn't always visible: Prevention, maintenance, and architecture don't produce "output"

- Collaboration amplifies impact: Helping others succeed doesn't show up in individual metrics

The Measurement Trap

Whatever you measure, people optimize for. If you measure lines of code, you get verbose code. If you measure tickets closed, you get ticket-splitting. If you measure commits, you get tiny commits.

This isn't malice—it's human nature. People respond to incentives. The trap is thinking you can find metrics immune to gaming. You can't.

The LOC Problem

The fact that anyone still tracks lines of code in 2025 is embarrassing. In the age of AI coding assistants, it's not just useless—it's counterproductive. A developer who deletes 500 lines while maintaining functionality has done better work than one who adds 500.

LOC rewards verbosity, not value. With Copilot and other AI tools generating code, the metric is now literally meaningless. If you see lines of code on a productivity dashboard, run the other way—you're looking at a tool built by people who don't understand software.

The Alternative Approach

Instead of searching for "un-gameable" metrics, use metrics for insight rather than evaluation. Metrics become tools for conversation, not judgment. This removes the incentive to game and creates genuine value.

Output vs Outcome Metrics

Output Metrics (Use Carefully)

Output metrics measure activity: PRs merged, commits made, lines changed. They're easy to measure but dangerous to optimize for.

Appropriate uses:

- Trend analysis: Is this developer's output declining? (Might indicate blockers or burnout)

- Capacity planning: How much throughput can we expect from the team?

- Self-reflection: Developers reviewing their own patterns

Inappropriate uses:

- Performance ranking

- Compensation decisions

- Comparing individuals
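Here is a minimal sketch of the trend-analysis use. It assumes you already have the merge dates of PRs as plain `date` values. The function names, the 8-week baseline, the 2-week recent window, and the 20% drop threshold are all hypothetical choices, not a standard. The sketch compares recent weekly throughput against a baseline and returns a flag meant to prompt a conversation about blockers. It works the same on a team's list or an individual's own list.

```python
from datetime import date, timedelta
from statistics import mean


def weekly_counts(merge_dates: list[date], end: date, weeks: int) -> list[int]:
    """Count merges in each of the `weeks` seven-day windows ending at `end`, oldest first."""
    counts = []
    for i in range(weeks, 0, -1):
        window_start = end - timedelta(days=7 * i)      # exclusive bound
        window_end = window_start + timedelta(days=7)   # inclusive bound
        counts.append(sum(1 for d in merge_dates if window_start < d <= window_end))
    return counts


def throughput_trend(merge_dates: list[date], end: date,
                     baseline_weeks: int = 8, recent_weeks: int = 2,
                     drop: float = 0.2) -> tuple[float, float, bool]:
    """Compare recent weekly throughput to a baseline.

    Returns (baseline average, recent average, declined?). "Declined" means the
    recent average fell more than `drop` (a fraction) below the baseline. The
    flag is a prompt to ask about blockers or burnout, not a performance verdict.
    """
    counts = weekly_counts(merge_dates, end, baseline_weeks + recent_weeks)
    baseline = mean(counts[:baseline_weeks])
    recent = mean(counts[baseline_weeks:])
    declined = baseline > 0 and recent < baseline * (1 - drop)
    return baseline, recent, declined
```

Averaging over several weeks keeps one quiet week, such as a holiday or an on-call rotation, from raising the flag.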

Outcome Metrics (More Meaningful)

Outcome metrics measure results: customer impact, business goals achieved, problems solved. They're harder to measure but more meaningful.

Examples:

- Features shipped that drove measurable user engagement

- Bug fixes that reduced support tickets by X%

- Performance improvements that improved conversion

- Platform work that accelerated other teams

The challenge: connecting engineering work to business outcomes requires product analytics, not just Git data.

Balancing Both

A complete picture combines output indicators (are we producing work?) with outcome indicators (does the work matter?). Neither alone tells the full story.

What Research Says About Developer Productivity

The SPACE Framework

The SPACE framework, developed by researchers at GitHub, Microsoft Research, and the University of Victoria, identifies five dimensions of productivity:

- Satisfaction and well-being: How developers feel about their work

- Performance: Outcomes of the developer's work

- Activity: Actions and outputs (the traditional focus)

- Communication and collaboration: How people work together

- Efficiency and flow: Ability to do work without interruption

Activity alone (what most metrics capture) is just one dimension. True productivity measurement includes subjective elements like satisfaction and flow.

Key Research Findings

- Context switching costs: Research on workplace interruptions suggests it can take 20 minutes or more to get back into a task after being interrupted. Reducing interruptions improves productivity more than working harder.

- Flow state matters: Developers in flow are dramatically more productive. Creating conditions for flow beats measuring output.

- Psychological safety: Teams where people feel safe taking risks and asking questions outperform teams that don't—regardless of individual talent.

- Quality and speed correlate: High-quality practices don't slow teams down—they speed them up by reducing rework.

Ethical Approaches to Productivity Measurement

Principle 1: Transparency

Everyone should know what's being measured and why. No secret dashboards. No metrics collected without disclosure.

Principle 2: Context Before Judgment

Numbers without context are meaningless. A developer with low output might be:

- Working on a hard problem

- Blocked by unclear requirements

- Doing valuable work that doesn't show up in metrics

- Having personal challenges

Always seek context before drawing conclusions.

Principle 3: Team-Level by Default

Focus metrics at the team level. Individual metrics should be for self-reflection and coaching, not evaluation. See our guide on team measurement.
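One way to make "team-level by default" concrete is to report only aggregates and to withhold them when the group is too small to hide individuals. The sketch below assumes PR cycle times in hours, grouped by author. The minimum of four contributors is a hypothetical threshold you would tune for your own teams.

```python
from statistics import median

# Hypothetical threshold: with fewer contributors than this, an "aggregate"
# would effectively expose individuals, so the summary is withheld.
MIN_CONTRIBUTORS = 4


def team_cycle_time_summary(cycle_times_by_author: dict[str, list[float]]) -> dict:
    """Summarize PR cycle times (hours) at team level only.

    Author names are used solely to count contributors. They never appear in the output.
    """
    contributors = [a for a, times in cycle_times_by_author.items() if times]
    if len(contributors) < MIN_CONTRIBUTORS:
        return {"status": "suppressed", "contributors": len(contributors)}
    all_times = [t for times in cycle_times_by_author.values() for t in times]
    return {
        "status": "ok",
        "contributors": len(contributors),
        "prs": len(all_times),
        "median_cycle_time_hours": median(all_times),
    }
```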

Principle 4: Improvement Over Evaluation

Use metrics to identify obstacles and improve processes, not to rank or punish individuals.

Principle 5: Developer Access First

Let developers see their own data before managers do. Self-reflection is valuable and non-threatening. When developers own their data narrative, trust increases.

Metrics That Destroy Trust

Some metrics don't just fail to help; they actively damage your engineering culture. According to Haystack Analytics, 83% of developers suffer from burnout. And the 2024 Stack Overflow Developer Survey found that 80% of developers are unhappy in their jobs. Bad metrics can contribute to both problems.

"If you're using individual developer metrics for performance reviews, you've already lost. You'll get exactly what you measure: gamed numbers and eroded trust."

The Trust-Destroying Metrics

These metrics signal to engineers that you don't understand their work:

- Lines of code: Rewards verbosity, punishes elegant solutions and refactoring

- Commit counts: Rewards splitting work artificially, punishes thoughtful batching

- Individual velocity rankings: Turns teammates into competitors, discourages helping others

- Time-at-desk or active hours: Surveillance theater that measures presence, not output

- Individual story points completed: Story points are for team capacity, not individual performance

The High Velocity Trap

High velocity is often a warning sign, not a success metric. Teams going "fast" are often just generating work, not shipping value. If your team's output doubled but customer satisfaction didn't budge, you optimized the wrong thing.

Velocity measures motion, not progress. When velocity becomes a goal, it stops being useful—you'll get more PRs, but they'll be smaller, simpler, and less valuable. You'll hit your numbers while missing your targets.

The "High Performer" Illusion

That developer with the most commits? They might just be breaking work into tiny pieces. The one with the fastest cycle time? They might be cherry-picking easy tasks. Git metrics tell you what happened, not who's valuable.

Visibility bias is real. The best engineers often do invisible work: architecture, mentoring, documentation, code review. If you only track what's countable, you'll undervalue what matters.

🔥 Our Take

The moment you compare Alice's cycle time to Bob's, you've turned teammates into competitors. Individual developer metrics destroy trust. Metrics are for understanding systems, not judging people.

Performance reviews should use metrics as conversation starters, not scorecards. When engineers are ranked against each other, collaboration dies. Why would Alice help Bob if it hurts her relative standing?

Team Productivity vs Individual Productivity

Why Team Metrics Work Better

Software is a team sport. Individual productivity metrics fail because:

- They ignore collaboration (which you want to encourage)

- They can be gamed (splitting work, cherry-picking easy tasks)

- They strip context (different roles, different complexity)

- They create competition instead of collaboration

Team metrics encourage helping each other, shared ownership, and collective problem-solving.

Healthy Team Metrics

- Team cycle time: How fast does the team ship?

- Team deployment frequency: How often does the team release?

- Team review coverage: Is code getting reviewed?

- Team quality indicators: Change failure rate, rework ratio
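The sketch below computes two of these indicators over a team's merged PRs. The `MergedPR` record and its fields are hypothetical. Review coverage here counts a PR as reviewed if someone other than the author approved it. The rework ratio is a simplified PR-level proxy that assumes rework PRs are flagged, for example with a label. Many tools instead measure rework ratio from lines of recently written code being changed again.

```python
from dataclasses import dataclass, field


@dataclass
class MergedPR:
    author: str
    approvers: set[str] = field(default_factory=set)  # who approved the PR
    is_rework: bool = False  # hypothetical flag, e.g. derived from a "rework" label


def review_coverage(prs: list[MergedPR]) -> float:
    """Share of merged PRs approved by at least one person other than the author."""
    if not prs:
        return 0.0
    reviewed = sum(1 for pr in prs if pr.approvers - {pr.author})
    return reviewed / len(prs)


def rework_ratio(prs: list[MergedPR]) -> float:
    """Share of merged PRs flagged as rework (a simplified, PR-level proxy)."""
    if not prs:
        return 0.0
    return sum(pr.is_rework for pr in prs) / len(prs)
```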

When Individual Data Is Appropriate

Individual data isn't inherently bad. Appropriate uses include:

- Self-reflection: Developers examining their own patterns

- 1:1 coaching: Manager and developer looking at data together to identify support needs

- Workload balancing: Identifying if someone is overloaded

- Recognition: Highlighting positive contributions through awards (see our developer awards guide)

Building a Productivity-Positive Culture

Focus on Obstacles, Not Output

Instead of asking "how do we get more output?", ask "what's slowing us down?" Common obstacles:

- Unclear requirements creating rework

- Slow code reviews blocking progress

- Too many meetings fragmenting focus time

- Technical debt making changes difficult

- Manual processes that could be automated

Removing obstacles improves productivity more than pushing harder.
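To check whether slow code reviews are the obstacle, a simple starting point is time to first review. The sketch below assumes you can export each PR's opening time and the time of its first review (or `None` if it has none yet). The input shape and field names are illustrative assumptions, not any particular tool's API. It reports team-level numbers only.

```python
from datetime import datetime, timedelta
from statistics import median
from typing import Optional


def review_wait_report(prs: list[tuple[datetime, Optional[datetime]]],
                       now: datetime) -> dict:
    """prs: (opened_at, first_review_at or None) pairs for the team's recent PRs."""
    waits = []          # hours from opening to first review, for reviewed PRs
    waiting_ages = []   # hours already spent waiting, for PRs with no review yet
    for opened_at, first_review_at in prs:
        if first_review_at is None:
            waiting_ages.append((now - opened_at).total_seconds() / 3600)
        else:
            waits.append((first_review_at - opened_at).total_seconds() / 3600)
    return {
        "median_hours_to_first_review": median(waits) if waits else None,
        "prs_waiting": len(waiting_ages),
        "oldest_wait_hours": max(waiting_ages, default=0.0),
    }
```

Tracking the oldest waiting PR alongside the median matters, because a healthy median can hide a few PRs that sit unreviewed for days.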

"Burnout is a management failure, not a personal weakness. If your team is burning out, you failed to set boundaries they couldn't set themselves."

Create Conditions for Flow

Flow state—deep focus on challenging work—is when developers are most productive. Support flow by:

- Protecting focus time (no-meeting blocks)

- Reducing interruptions (async communication)

- Clear priorities (so people know what to work on)

- Autonomy (let developers choose how to solve problems)

Learn more in our context switching patterns guide.
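As a quick check on whether a calendar actually leaves room for flow, you can list the meeting-free gaps long enough for deep work. This sketch assumes meetings are available as `(start, end)` datetime pairs. The two-hour minimum is an illustrative choice, not a research-backed threshold.

```python
from datetime import datetime, timedelta


def focus_blocks(day_start: datetime, day_end: datetime,
                 meetings: list[tuple[datetime, datetime]],
                 min_length: timedelta = timedelta(hours=2)) -> list[tuple[datetime, datetime]]:
    """Return meeting-free gaps within the workday that are at least `min_length` long."""
    blocks = []
    cursor = day_start  # end of the latest meeting seen so far
    for start, end in sorted(meetings):
        if end <= day_start or start >= day_end:
            continue  # meeting falls entirely outside the workday
        start, end = max(start, day_start), min(end, day_end)
        if start - cursor >= min_length:
            blocks.append((cursor, start))
        cursor = max(cursor, end)  # handles overlapping meetings
    if day_end - cursor >= min_length:
        blocks.append((cursor, day_end))
    return blocks
```

For example, a 9:00 to 17:00 day with meetings at 10:00, 12:00, and 16:00 leaves only one two-hour block (13:00 to 16:00). Moving the 10:00 meeting next to the noon one would open a second block.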

Celebrate the Right Things

What you celebrate signals what you value. Celebrate:

- Problems solved, not just code shipped

- Help given to teammates

- Quality improvements

- Knowledge sharing

- Sustainable pace maintained

Don't celebrate:

- Heroic long hours

- Highest output volume

- Individual achievements that came at team expense

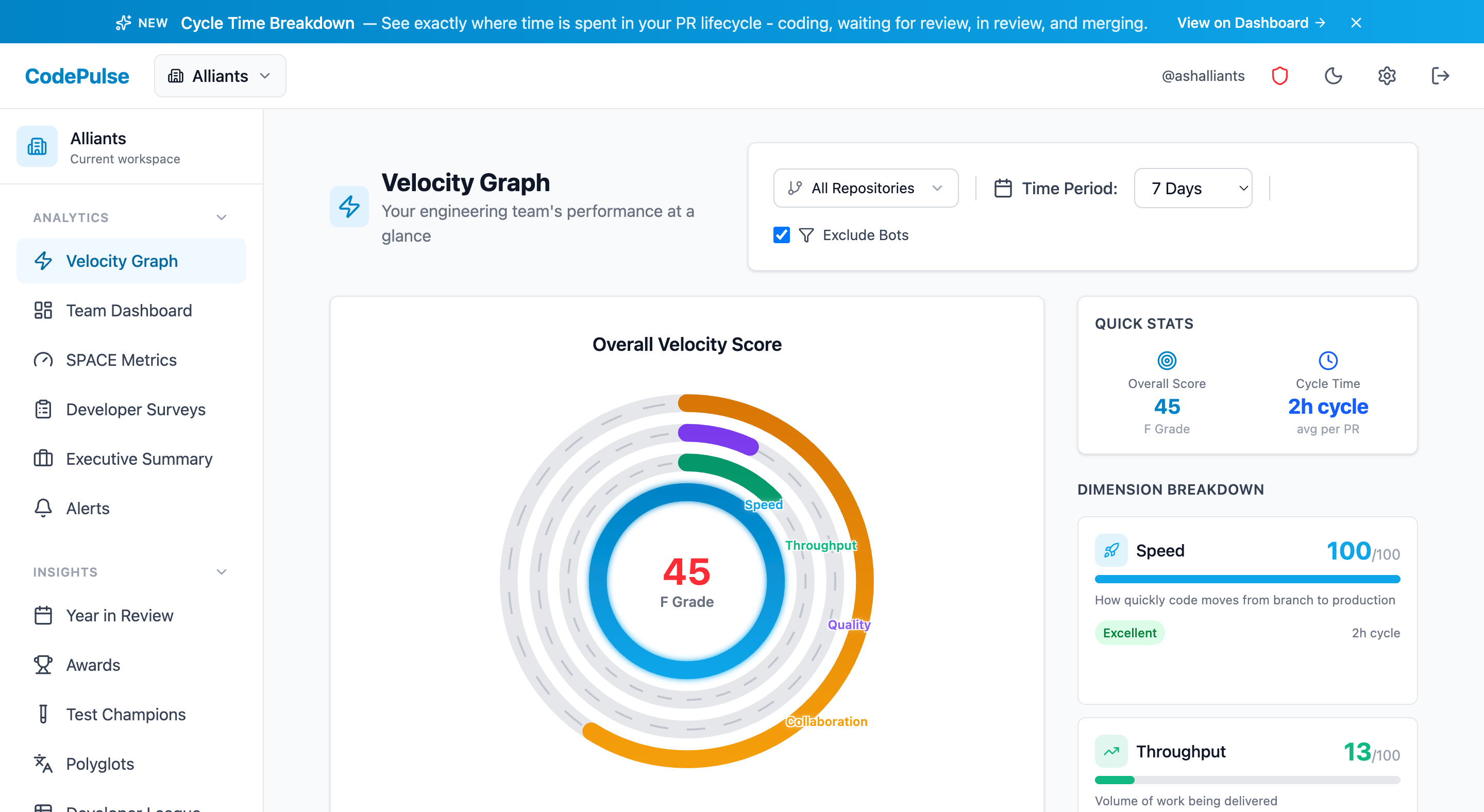

📊 How to See This in CodePulse

CodePulse helps build a healthy productivity culture:

- Dashboard - Team-level metrics with trend analysis

- Awards - Recognize collaboration and quality, not just output

- Review Network - See collaboration patterns and knowledge sharing

Getting Started with Ethical Productivity Measurement

Step 1: Clarify Purpose

Be explicit about why you're measuring. Good reasons:

- "We want to identify and remove obstacles"

- "We want to track improvement over time"

- "We want data to inform process experiments"

Bad reasons:

- "We want to know who's not working hard enough"

- "We need to justify the team to leadership"

Step 2: Start with Team Metrics

Begin with team-level DORA metrics: lead time for changes (closely related to cycle time), deployment frequency, and change failure rate. These provide insight without individual surveillance.
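The sketch below computes these three metrics for one team over a reporting period. It follows DORA's framing: lead time runs from commit to running in production, and a change failure is a deployment that needed remediation, such as a rollback or hotfix. The `Deployment` record is a hypothetical data model. In practice the data would come from your CI/CD and incident tooling.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median
from typing import Optional


@dataclass
class Deployment:
    deployed_at: datetime
    commit_times: list[datetime]   # when each change shipped in this deploy was committed
    caused_failure: bool = False   # needed remediation (rollback, hotfix, ...)


def dora_summary(deploys: list[Deployment], period_days: int) -> Optional[dict]:
    """Team-level lead time, deployment frequency, and change failure rate."""
    if not deploys:
        return None
    lead_times = [
        (d.deployed_at - c).total_seconds() / 3600  # hours from commit to production
        for d in deploys
        for c in d.commit_times
    ]
    return {
        "median_lead_time_hours": median(lead_times) if lead_times else None,
        "deploys_per_week": len(deploys) / (period_days / 7),
        "change_failure_rate": sum(d.caused_failure for d in deploys) / len(deploys),
    }
```

The median is used for lead time because a few long-lived changes would otherwise dominate an average.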

Step 3: Make Everything Transparent

Share what you're measuring and why. Let everyone see the same dashboards. Address concerns openly.

Step 4: Use for Conversation

Bring metrics into retrospectives and 1:1s as discussion prompts. "What does this trend tell us?" rather than "Why is this number low?"

Step 5: Iterate Based on Feedback

Ask your team regularly: "Are these metrics helping us improve?" Adjust based on what you learn.

💡 The Ultimate Test

Ask your developers: "Do you find our productivity metrics useful for improving how we work?" If the answer is no, you have more work to do. If the answer is yes, you've built something valuable.

Continue Your Productivity Journey

This guide focused on the philosophy and ethics of productivity measurement. For practical next steps, explore our related guides:

- What is Developer Productivity? — Understand the three dimensions of productivity and popular frameworks like DORA and SPACE

- How to Improve Developer Productivity — The 5 highest-impact interventions that actually work

- Developer Productivity Tools Guide — Compare tools across categories with honest trade-offs

- Developer Productivity Engineering (DPE) — How Netflix, Meta, and Spotify approach productivity systematically

See these insights for your team

CodePulse connects to your GitHub and shows you actionable engineering metrics in minutes. No complex setup required.

Free tier available. No credit card required.

Related Guides

What is Developer Productivity? (It's Not What You Think)

Developer productivity means sustainable delivery with team health—not lines of code. Learn the frameworks, dimensions, and what NOT to measure.

Improve Developer Productivity Without Burnout

A practical guide to improving developer productivity through friction reduction, not pressure. The 5 highest-impact interventions that actually work.

Developer Productivity Tools: The 2026 Buyer's Guide

Compare developer productivity tools across 5 categories: IDEs, AI assistants, analytics platforms, and more. Honest trade-offs included.

Developer Productivity Engineering: What DPE Does

Developer Productivity Engineering (DPE) is how Netflix, Meta, and Spotify keep engineers productive. Learn what DPE teams do and how to start one.