Pull request cycle time—the elapsed time from opening a PR to merging it—is one of the most impactful metrics for engineering team velocity. Teams with long cycle times ship slower, context-switch more, and often experience lower morale. The good news? Cycle time is highly improvable with the right focus.

This guide provides a practical playbook for reducing PR cycle time by 30% or more within 30 days. We'll cover how to identify bottlenecks, implement review SLAs, and sustain improvements over time.

🔥 Our Take

Cycle time is a signal, not a goal. If you're optimizing cycle time at the cost of review quality, you're optimizing the wrong thing.

Fast cycle time with no review is worse than slow cycle time with thorough review. The moment cycle time becomes a target—tracked on dashboards, tied to performance—teams will find ways to hit the number. They'll rubber-stamp approvals, skip meaningful feedback, and merge before they should. You'll get faster PRs and worse software. Cycle time tells you where friction exists. It doesn't tell you whether that friction is waste or valuable diligence.

Why PR Cycle Time Matters

Every hour a pull request sits waiting is an hour of delayed value delivery. But the impact goes beyond simple delays:

"A PR waiting for review is inventory sitting on a shelf. It depreciates. Conflicts accumulate. Context fades. The longer it sits, the less valuable the merge."

The Hidden Costs of Long Cycle Times

- Context switching: When developers wait days for review, they start other work. When feedback arrives, they must reload the original context, often taking 15-30 minutes per switch.

- Merge conflicts: The longer a branch lives, the more likely it will conflict with other changes. Resolving conflicts is pure waste.

- Larger batches: Slow review processes encourage developers to batch changes into larger PRs, which are harder to review and more likely to contain bugs.

- Reduced feedback quality: Reviewers faced with PRs that have been open for days feel pressure to approve quickly, reducing review thoroughness.

The Compounding Effect

If your team opens 20 PRs per week and each one takes 3 extra days to merge, that's 60 PR-days of extra waiting every week. Assuming a 5-day working week, that works out to roughly 12 additional PRs sitting in queues at any moment (4 new PRs per day × 3 days), each one an open branch your team has to keep in its head.
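Little's law (average items in a queue = arrival rate × average time spent in the queue) turns these two numbers into a count of open PRs. A minimal sketch, assuming a 5-day working week and that the 3 extra days are working days:

```python
def extra_open_prs(prs_per_week: float, extra_days: float, working_days: int = 5) -> float:
    """Average number of extra PRs open at any moment, via Little's law (L = lambda * W)."""
    arrivals_per_day = prs_per_week / working_days  # PRs opened per working day
    return arrivals_per_day * extra_days            # queue size = arrival rate x time in queue


# 20 PRs per week, each waiting 3 extra working days
print(extra_open_prs(20, 3))  # 12.0
# The same inputs also give 20 * 3 = 60 PR-days of extra waiting per week.
```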

Reducing cycle time doesn't just speed up individual PRs—it reduces the entire system's cognitive overhead and creates faster feedback loops throughout your development process.

Measuring Your Current Cycle Time

Before you can improve, you need to measure. Here's how to get accurate cycle time data from your GitHub repositories.

What to Measure

Total Cycle Time: Time from PR opened to PR merged. This is your headline metric.

Break it down into components:

- Time to First Review: How long until someone starts reviewing? This is often the largest component.

- Review Duration: Time from first review to approval, including any rounds of feedback and revision

- Time to Merge After Approval: Delay between approval and actual merge
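As a sketch of how the components fit together, the Python below splits one PR's timeline into the three phases. The `PullRequest` record and its field names are hypothetical; in practice you would fill them from your Git host's PR and review timestamps. It assumes every PR has a first review and an approval, which will not hold for PRs merged without review.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class PullRequest:
    # Hypothetical record; these field names are illustrative, not a real API schema.
    opened_at: datetime
    first_review_at: datetime
    approved_at: datetime
    merged_at: datetime


def hours(delta: timedelta) -> float:
    return delta.total_seconds() / 3600


def breakdown(pr: PullRequest) -> dict[str, float]:
    """Split total cycle time (opened -> merged) into its three components, in hours."""
    return {
        "time_to_first_review": hours(pr.first_review_at - pr.opened_at),
        "review_duration": hours(pr.approved_at - pr.first_review_at),
        "time_to_merge_after_approval": hours(pr.merged_at - pr.approved_at),
        "total_cycle_time": hours(pr.merged_at - pr.opened_at),
    }


pr = PullRequest(
    opened_at=datetime(2024, 5, 6, 9, 0),
    first_review_at=datetime(2024, 5, 6, 15, 0),
    approved_at=datetime(2024, 5, 7, 11, 0),
    merged_at=datetime(2024, 5, 7, 13, 0),
)
print(breakdown(pr))
```

The three components always sum to the total cycle time, which makes a useful sanity check on your data.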

Establishing Baselines

Calculate your current metrics over the past 30-90 days:

- Average cycle time (mean)

- Median cycle time (often more useful—less skewed by outliers)

- 90th percentile cycle time (your "worst case" scenarios)

- Cycle time by team/repository (to identify specific problem areas)
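A minimal sketch of these baseline calculations using Python's standard `statistics` module. The input shapes (a list of cycle times in hours, and `(repo, hours)` pairs) are assumptions for illustration:

```python
import statistics
from collections import defaultdict


def baseline(cycle_times_hours: list[float]) -> dict[str, float]:
    """Mean, median, and 90th percentile of PR cycle times, in hours."""
    # quantiles(n=10) returns the 9 decile cut points; the last one is the 90th percentile.
    p90 = statistics.quantiles(cycle_times_hours, n=10)[-1]
    return {
        "mean": statistics.mean(cycle_times_hours),
        "median": statistics.median(cycle_times_hours),
        "p90": p90,
    }


def median_by_repo(prs: list[tuple[str, float]]) -> dict[str, float]:
    """Median cycle time per repository, from (repo, cycle_time_hours) pairs."""
    grouped: dict[str, list[float]] = defaultdict(list)
    for repo, hours in prs:
        grouped[repo].append(hours)
    return {repo: statistics.median(values) for repo, values in grouped.items()}


times = [2, 4, 5, 8, 10, 20, 30, 48, 72, 200]
print(baseline(times))
print(median_by_repo([("api", 4), ("api", 10), ("web", 30)]))
```

With one 200-hour outlier in the sample, the mean (39.9 hours) lands far above the median (15 hours), which is why the median is usually the better headline number. `statistics.quantiles` interpolates with its default "exclusive" method, so other tools may report a slightly different 90th percentile.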

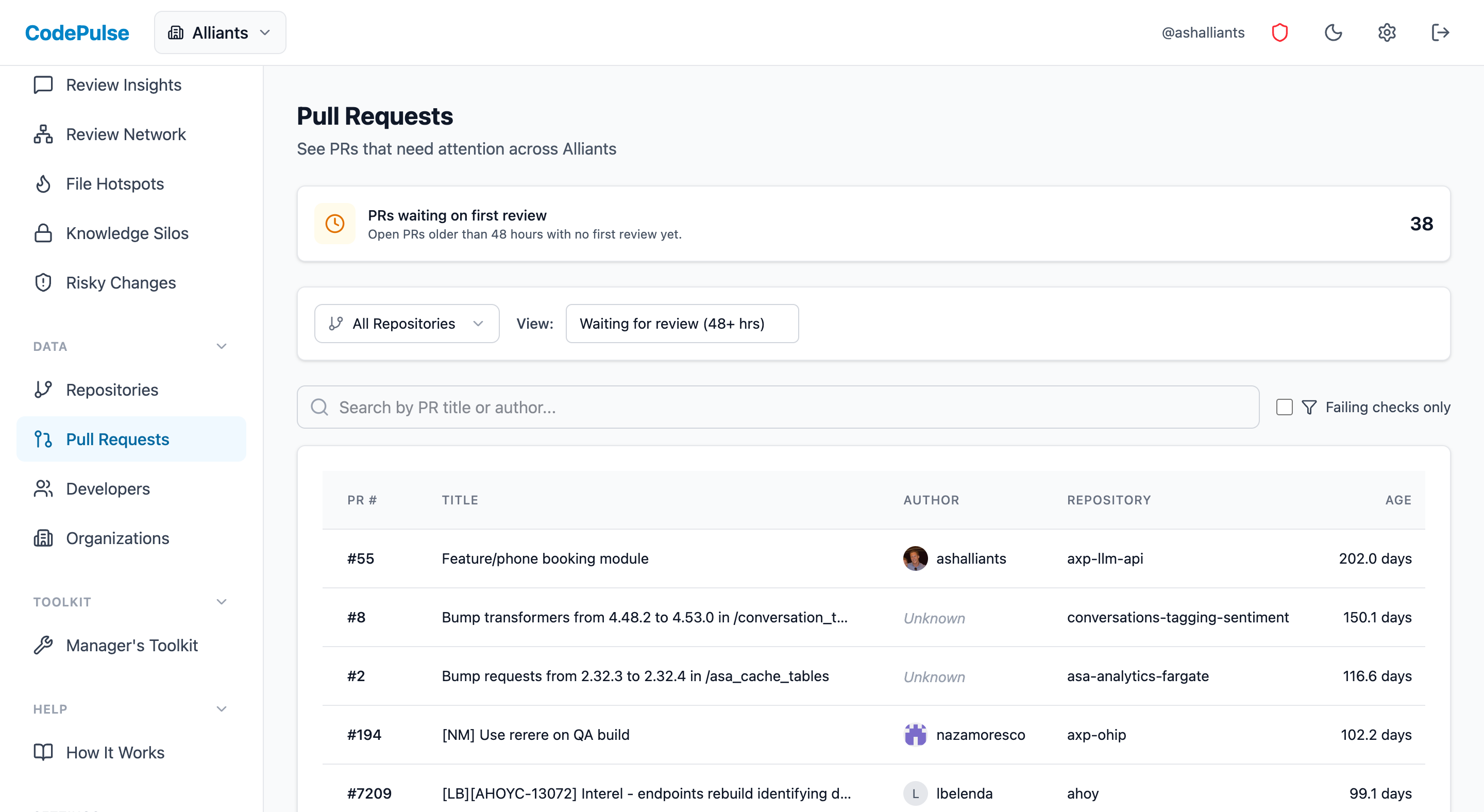

📊 How to See This in CodePulse

Navigate to Dashboard to view your cycle time breakdown:

- The cycle time chart shows your median and trend over time

- Hover over the breakdown to see the 4 components: coding time, wait for review, review duration, and merge time

- Filter by repository to identify which repos have the longest cycle times

- Switch between daily, weekly, and monthly views to spot patterns

Industry Benchmarks

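According to the 2024 DORA State of DevOps Report, the performance distribution has shifted: the high-performer group shrank from 31% to 22% of respondents, while the low-performer group grew from 17% to 25%. Lead time for changes (commit to production, which includes PR cycle time) is a key differentiator. The bands below are a rough guide for PR cycle time specifically, not thresholds published by DORA: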

| Performance Level | PR Cycle Time |
|---|---|
| Elite | < 1 day |
| High | 1-3 days |
| Medium | 3-7 days |
| Low | > 7 days |

If your median cycle time is above 3 days, there's significant room for improvement. But here's what matters more: the DORA research found that psychological safety is among the strongest predictors of software delivery performance. Teams that feel safe to take risks, ask questions, and push back on unrealistic deadlines consistently outperform those optimizing purely for speed metrics.

Identifying Bottlenecks

Long cycle times usually have specific, identifiable causes. Here are the most common bottlenecks and how to identify them.

1. Review Queue Bottleneck

Symptom: Long time to first review, especially for certain repositories or teams.

Diagnosis: Check your review load distribution. If a small number of reviewers handle most reviews, you have a concentration problem. Look for:

- Single reviewers handling >20% of team reviews

- Repositories with only 1-2 qualified reviewers

- Senior engineers spending >4 hours/day on reviews
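One way to check the first signal is to count completed reviews per reviewer and flag anyone above the 20% threshold. A sketch, assuming you can export one reviewer login per completed review (the sample names are made up):

```python
from collections import Counter


def review_concentration(reviewers: list[str], threshold: float = 0.20) -> dict[str, float]:
    """Reviewers whose share of all completed reviews exceeds `threshold`.

    `reviewers` holds one login per completed review.
    """
    counts = Counter(reviewers)
    total = sum(counts.values())
    if total == 0:
        return {}
    return {
        name: count / total
        for name, count in counts.most_common()  # busiest reviewers first
        if count / total > threshold
    }


reviews = ["alice"] * 9 + ["bob"] * 3 + ["carol"] * 2 + ["dan", "erin"]
print(review_concentration(reviews))  # {'alice': 0.5625}
```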

For strategies on distributing review load more evenly, see our Review Load Balancing Guide.

2. Large PR Bottleneck

Symptom: High variance in cycle time. Some PRs merge quickly; others take forever.

Diagnosis: Correlate PR size with cycle time. Widely cited research suggests that reviews lose effectiveness above roughly 400 changed lines: reviewers fatigue and start skimming, so larger PRs get more superficial reviews. Some studies also report that PRs waiting longer than 24 hours are about twice as likely to be abandoned. Check:

- Average PR size (additions + deletions)

- Percentage of PRs over 400 lines

- Cycle time segmented by PR size
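The three checks above can be computed together. This sketch assumes each merged PR is available as an `(additions, deletions, cycle_time_hours)` tuple, and the bucket boundaries (under 100, 100 to 400, over 400 changed lines) are chosen to match the SLA tiers used later in this guide:

```python
import statistics
from collections import defaultdict


def size_bucket(changed_lines: int) -> str:
    """Bucket a PR by changed lines (additions + deletions)."""
    if changed_lines < 100:
        return "small"
    if changed_lines <= 400:
        return "medium"
    return "large"


def size_report(prs: list[tuple[int, int, float]]) -> dict:
    """prs: one (additions, deletions, cycle_time_hours) tuple per merged PR."""
    sizes = [adds + dels for adds, dels, _ in prs]
    hours_by_bucket: dict[str, list[float]] = defaultdict(list)
    for size, (_, _, hours) in zip(sizes, prs):
        hours_by_bucket[size_bucket(size)].append(hours)
    return {
        "average_size": statistics.mean(sizes),
        "pct_over_400": 100 * sum(size > 400 for size in sizes) / len(sizes),
        "median_hours_by_size": {
            bucket: statistics.median(hours) for bucket, hours in hours_by_bucket.items()
        },
    }


prs = [(40, 10, 3.0), (60, 20, 5.0), (200, 50, 20.0), (300, 80, 30.0), (700, 300, 96.0)]
print(size_report(prs))
```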

"The best predictor of review quality isn't reviewer seniority—it's PR size. After 400 lines, reviewers stop reading and start skimming."

3. Timezone/Availability Bottleneck

Symptom: PRs opened late in the day take much longer to merge than those opened in the morning.

Diagnosis: Analyze cycle time by PR creation hour. If PRs created after 3pm consistently take 2x longer, your team may lack overlapping working hours for timely reviews. For distributed teams, see our guide on async code review for distributed teams.
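A sketch of that comparison: it takes each PR's creation time and cycle time and returns how many times longer the median late-day PR takes than the median earlier PR. It assumes the timestamps are already in the author's local time zone; for distributed teams you may want to run it per time zone. The 3pm cutoff matches the example above and is adjustable.

```python
import statistics
from datetime import datetime


def late_day_slowdown(prs: list[tuple[datetime, float]], cutoff_hour: int = 15) -> float:
    """How many times longer PRs opened at/after `cutoff_hour` take, by median cycle time.

    prs: (opened_at in the author's local time, cycle_time_hours).
    """
    early = [hours for opened, hours in prs if opened.hour < cutoff_hour]
    late = [hours for opened, hours in prs if opened.hour >= cutoff_hour]
    if not early or not late:
        raise ValueError("need PRs opened both before and after the cutoff")
    return statistics.median(late) / statistics.median(early)


sample = [
    (datetime(2024, 5, 6, 9, 0), 6.0),
    (datetime(2024, 5, 6, 10, 30), 8.0),
    (datetime(2024, 5, 6, 13, 0), 10.0),
    (datetime(2024, 5, 6, 15, 30), 20.0),
    (datetime(2024, 5, 6, 17, 0), 16.0),
]
print(late_day_slowdown(sample))  # 2.25
```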

4. CI/CD Bottleneck

Symptom: PRs approved but not merged for hours or days.

Diagnosis: Check time from approval to merge. If this is consistently high, look for:

- Slow CI pipelines blocking merge

- Flaky tests requiring reruns

- Manual deployment gates

When Slow is Actually Fine

Not every slow PR is a problem. Before you optimize cycle time into the ground, recognize when slower is better:

Legitimate Reasons for Longer Cycle Times

- Complex architectural changes: A PR that redesigns your auth system should take longer than a typo fix. If reviewers are asking hard questions and requesting design changes, that's the review process working.

- Cross-team coordination: Changes affecting multiple teams need input from multiple stakeholders. This isn't a bottleneck—it's appropriate governance.

- Security-sensitive code: Payment processing, authentication, encryption—these deserve extra scrutiny even if it adds days.

- Junior developer growth: A PR where a senior spends 3 hours mentoring a junior through improvements isn't "slow"—it's investment.

- First-time contributors: New team members need more thorough reviews to learn the codebase. Their cycle time will naturally be higher.

"If every PR merges in under 4 hours, you're either a team of clones or you've stopped giving meaningful feedback."

The Tradeoff Matrix

| PR Type | Target Cycle Time | Acceptable Variance |
|---|---|---|
| Bug fixes, typos | < 4 hours | Low: these should be fast |
| Feature additions | 1-2 days | Medium: depends on scope |
| Refactors, tech debt | 2-3 days | Higher: benefits from deliberation |
| Architecture changes | 3-5 days | High: speed here is risky |
| Security-sensitive | 2-5 days | Very high: thoroughness trumps speed |

When reviewing your cycle time metrics, segment by PR type. A 4-day architecture PR and a 4-day typo fix are very different situations.
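To segment by type, you need a way to tag each PR (labels, branch prefixes, or similar). The sketch below assumes hypothetical type labels and converts the upper end of each target range in the tradeoff matrix to calendar hours; it flags PRs that exceeded the target for their own type rather than a single global number:

```python
# Upper end of each target range from the tradeoff matrix, in calendar hours.
# The type labels are hypothetical; use whatever tagging your team already has.
TARGET_HOURS = {
    "bugfix": 4,
    "feature": 2 * 24,
    "refactor": 3 * 24,
    "architecture": 5 * 24,
    "security": 5 * 24,
}


def over_target(prs: list[tuple[str, str, float]]) -> list[str]:
    """IDs of PRs whose cycle time exceeds the target for their own type.

    prs: (pr_id, pr_type, cycle_time_hours).
    """
    return [pr_id for pr_id, pr_type, hours in prs if hours > TARGET_HOURS[pr_type]]


prs = [
    ("#101", "bugfix", 96.0),        # 4-day typo fix
    ("#102", "architecture", 96.0),  # 4-day architecture change
    ("#103", "feature", 30.0),
]
print(over_target(prs))  # ['#101']
```

Here a 4-day bug fix is flagged while a 4-day architecture PR is not, which is exactly the distinction a single team-wide average hides.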

Questions to Ask Before Optimizing

- Are PRs slow because of waste (waiting) or value (thoughtful review)?

- Would faster merges have prevented any incidents, or caused them?

- Are your best engineers complaining about review delays, or about pressure to approve too quickly?

- When a PR takes longer, does it emerge better?

If the honest answer is that your slow PRs are slow because they're getting better, you may not have a problem worth solving. Focus your optimization efforts on the PRs that are slow due to neglect, not thoroughness.

Review SLA Best Practices

Service Level Agreements for code review transform review from "when I get to it" to an expected team responsibility.

Setting the Right SLA

Recommended starting points:

Code Review SLA Framework:

| PR Size | First Response | Total Cycle Time |
|---|---|---|
| Small (<100 lines) | 2 hours | Same-day merge |
| Medium (<400 lines) | 4 hours | 24 hours |
| Large (>400 lines) | 8 hours | 48 hours (see note) |

Note: Large PRs should trigger a conversation about breaking them down, not just longer SLAs.

Follow-up response SLA: 2 hours after the author updates the PR.

Escalation path:

- Yellow: PR at 75% of SLA → Slack reminder to reviewers

- Red: PR at 100% of SLA → Escalate to team lead

- Critical: PR at 200% of SLA → Auto-tag EM for review

Important: SLAs should be team commitments, not individual mandates.
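The SLA tiers and escalation thresholds translate directly into code. A minimal sketch, assuming PR size is measured in changed lines and that a PR of exactly 400 lines counts as medium (the framework leaves that boundary open); the level names are illustrative:

```python
from typing import Optional


def first_response_sla_hours(changed_lines: int) -> int:
    """First-response SLA by PR size (assumes exactly 400 lines counts as medium)."""
    if changed_lines < 100:
        return 2
    if changed_lines <= 400:
        return 4
    return 8


def escalation_level(hours_waiting: float, sla_hours: float) -> Optional[str]:
    """Map time waited against the SLA to an escalation level, or None if on track."""
    ratio = hours_waiting / sla_hours
    if ratio >= 2.0:
        return "critical"  # 200% of SLA: auto-tag the EM
    if ratio >= 1.0:
        return "red"       # 100% of SLA: escalate to team lead
    if ratio >= 0.75:
        return "yellow"    # 75% of SLA: Slack reminder to reviewers
    return None


sla = first_response_sla_hours(250)   # medium PR -> 4 hours
print(escalation_level(3.5, sla))     # yellow
```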

Implementing SLA Enforcement

Visibility: Make SLA adherence visible. A dashboard showing "PRs approaching SLA" and "PRs past SLA" creates healthy accountability.

Alerts: Set up automated alerts when PRs approach or exceed SLA thresholds. Slack notifications work well—send to the team channel, not individuals. See our guide on setting up engineering metric alerts for detailed configuration advice.
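For the team-channel notification, Slack incoming webhooks accept a JSON body with a `text` field. This sketch builds a single digest message rather than one ping per person; the webhook URL, PR titles, and level names are placeholders, and sending is kept separate so the message can be tested without network access:

```python
import json
import urllib.request


def build_digest(prs: list[tuple[str, str, str]]) -> dict:
    """Build one Slack message for the team channel.

    prs: (title, url, level), where level is e.g. "yellow", "red", or "critical".
    """
    if not prs:
        return {"text": "No PRs are approaching or past the review SLA."}
    lines = ["PRs approaching or past the review SLA:"]
    for title, url, level in prs:
        # <url|text> is Slack's link syntax in message text.
        lines.append(f"- [{level.upper()}] <{url}|{title}>")
    return {"text": "\n".join(lines)}


def post_to_slack(webhook_url: str, payload: dict) -> None:
    """POST the payload as JSON to a Slack incoming webhook (URL is a placeholder)."""
    request = urllib.request.Request(
        webhook_url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        response.read()


message = build_digest([("Fix login redirect", "https://example.com/pr/42", "red")])
print(message["text"])
# post_to_slack("https://hooks.slack.com/services/...", message)
```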

Escalation: For PRs significantly past SLA, have a clear escalation path. The author should be empowered to ping reviewers directly without feeling awkward.

Avoiding SLA Anti-Patterns

- Don't punish individuals: If someone consistently misses SLAs, investigate their workload—don't blame them.

- Don't sacrifice quality: An SLA that leads to rubber-stamp approvals defeats the purpose.

- Don't ignore context: Complex PRs may legitimately need more time. SLAs should be guidelines, not absolutes.

30-Day Implementation Plan

Week 1: Measure and Baseline

- Day 1-2: Set up cycle time tracking. Calculate current averages and identify your biggest repositories/teams.

- Day 3-4: Break down cycle time into components. Identify which phase (waiting for review, in review, waiting to merge) takes longest.

- Day 5: Share findings with team leads. Get buy-in that this is worth improving.

Week 2: Quick Wins

- Day 6-7: Implement review rotation or "review champion" role. Ensure someone is always responsible for reviewing incoming PRs.

- Day 8-9: Set up basic alerts for PRs waiting >4 hours for first review.

- Day 10: Communicate new review SLA expectations to the team. Make it a team commitment, not a top-down mandate.
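A review rotation can be as simple as round-robin assignment that skips the PR author. A sketch with a hypothetical reviewer list; you would likely also want to skip people who are out of office:

```python
class ReviewRotation:
    """Round-robin reviewer assignment that never assigns a PR to its own author."""

    def __init__(self, reviewers: list[str]):
        if len(set(reviewers)) < 2:
            raise ValueError("a rotation needs at least two distinct reviewers")
        self._reviewers = list(reviewers)
        self._next = 0  # index of the next person in line

    def assign(self, author: str) -> str:
        # Walk at most one full lap; with 2+ distinct reviewers someone is eligible.
        for _ in range(len(self._reviewers)):
            candidate = self._reviewers[self._next]
            self._next = (self._next + 1) % len(self._reviewers)
            if candidate != author:
                return candidate
        raise RuntimeError("no eligible reviewer")  # unreachable with 2+ distinct reviewers


rotation = ReviewRotation(["alice", "bob", "carol"])
print(rotation.assign(author="dan"))  # alice
print(rotation.assign(author="bob"))  # carol (bob is skipped for his own PR)
```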

Week 3: Address Root Causes

- Day 11-14: If large PRs are an issue, establish PR size guidelines and provide training on breaking down work.

- Day 15-17: If review concentration is an issue, begin cross-training to expand your reviewer pool.

- Day 18-21: If CI is slow, prioritize pipeline optimization or add caching.

Week 4: Measure and Iterate

- Day 22-25: Measure new cycle times. Compare to baseline.

- Day 26-28: Identify remaining bottlenecks. Adjust SLAs or processes as needed.

- Day 29-30: Share results with the team. Celebrate improvements. Set targets for the next 30 days.
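When comparing against the baseline, compute the reduction on the same statistic you baselined (the median, for example). A one-function sketch:

```python
def percent_reduction(baseline_hours: float, new_hours: float) -> float:
    """Percentage reduction in cycle time relative to the baseline."""
    if baseline_hours <= 0:
        raise ValueError("baseline must be positive")
    return 100 * (baseline_hours - new_hours) / baseline_hours


# Median cycle time fell from 40 hours to 26 hours.
print(percent_reduction(40.0, 26.0))  # 35.0
```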

Sustaining Improvements

Initial improvements often fade without ongoing attention. Here's how to make faster cycle times stick.

Make Metrics Visible

Display cycle time metrics in a shared dashboard. Include it in sprint retrospectives. What gets measured and discussed gets improved.

Celebrate Progress

When cycle time improves, acknowledge it publicly. "We shipped 40% faster this month" is motivating. Teams that see the impact of their efforts continue investing in process improvement.

Regular Review of Outliers

Weekly, review PRs that took significantly longer than average. Understanding why specific PRs got stuck helps prevent future occurrences.
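What counts as "significantly longer" is a team choice. This sketch assumes a threshold of twice the median, using the median rather than the mean so the outliers you are hunting for don't inflate the threshold:

```python
import statistics


def weekly_outliers(cycle_times: dict[str, float], factor: float = 2.0) -> list[str]:
    """PR IDs whose cycle time exceeds `factor` x the median, slowest first."""
    threshold = factor * statistics.median(list(cycle_times.values()))
    slow = [(pr_id, hours) for pr_id, hours in cycle_times.items() if hours > threshold]
    slow.sort(key=lambda item: item[1], reverse=True)
    return [pr_id for pr_id, _ in slow]


this_week = {"#1": 5.0, "#2": 8.0, "#3": 10.0, "#4": 30.0, "#5": 60.0}
print(weekly_outliers(this_week))  # ['#5', '#4']
```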

Continuous Improvement Mindset

Once you've achieved your 30% reduction, don't stop. Elite teams continuously optimize. Set new targets, identify new bottlenecks, and keep improving. For a deeper dive into the components of cycle time, see our Cycle Time Breakdown Guide.

Protect Against Backsliding

Set up alerts if cycle time trends upward. Catch regressions early before they become the new normal. See our Alert Rules Guide for configuration best practices.
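A simple regression check compares the median of recent weekly medians with the window before it. This is a sketch; the 4-week window and 15% tolerance are arbitrary starting points, not recommendations from any standard:

```python
import statistics


def cycle_time_regressed(weekly_medians: list[float], window: int = 4, tolerance: float = 0.15) -> bool:
    """True if the last `window` weeks run more than `tolerance` slower than the window before.

    weekly_medians: one median cycle time (hours) per week, oldest first.
    """
    if len(weekly_medians) < 2 * window:
        return False  # not enough history to compare two full windows
    recent = statistics.median(weekly_medians[-window:])
    previous = statistics.median(weekly_medians[-2 * window:-window])
    return recent > previous * (1 + tolerance)


print(cycle_time_regressed([20, 22, 21, 19, 24, 26, 27, 25]))  # True
print(cycle_time_regressed([20, 21, 20, 21, 21, 20, 21, 20]))  # False
```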

Final Thoughts

Cycle time improvement is not about making every PR merge faster. It's about removing unnecessary friction while preserving valuable friction. The goal is not a leaderboard of fast mergers—it's a team that ships quality code with minimal waste.

Remember: the teams with the best cycle times didn't get there by optimizing cycle time. They got there by building trust, reducing batch sizes, automating the boring parts, and creating a culture where review is a shared responsibility, not a bottleneck.

If you take one thing from this guide, let it be this: measure cycle time to understand your process, not to judge your people. Use it as a diagnostic tool, not a performance metric. The moment you turn it into a target, you'll get faster numbers and worse software.

For more context on how to think about engineering metrics holistically, see our DORA Metrics Guide and our guide on measuring team performance without micromanaging.
